Evolutionary Robotics

Evolutionary Robotics (ER) is the attempt to evolve robots artificially by mimicking natural evolution. The design targets of artificial evolution in ER are generally the rules that govern the robot's behaviour and the parameters of its controller. When artificial neural networks (ANNs) are used as controllers, the design targets are their synaptic connection strengths and network structure. In particular, ER with structurally evolved ANNs is considered to have a wide range of applications. In this project, we analyse the evolutionary dynamics of the artificial evolution of robots and apply the results to swarm robot systems.

Evolutionary Computation

Evolutionary computation is a family of computational methods that attempt to achieve a desired specification or performance using algorithms that mimic the mechanisms of biological evolution. It is an optimisation approach that searches for a solution using a population of candidates, in contrast to local search, which moves from one point to the next. Its main features are that it can be applied regardless of whether the objective function is continuous or differentiable, and that it uses stochastic transition rules. Most of these methods are rooted in Darwinian theory, and the majority share three basic building blocks:

- Adaptation: determines the extent to which an individual affects future generations

- Reproduction: individuals produce offspring in the next generation

- Genetic manipulation: determining the genetic information of the offspring from the genetic information of the parents.
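
The three building blocks above can be sketched as a single loop. The following is a minimal illustration, not the laboratory's implementation: the objective `sphere`, the tournament selection, the BLX-alpha crossover, the Gaussian mutation and all parameter values are assumptions chosen for brevity.

```python
import random

def sphere(x):
    """Hypothetical objective: minimise the sum of squares (optimum at the origin)."""
    return sum(v * v for v in x)

def tournament(population, fitness, rng, k=2):
    """Adaptation: fitter individuals are more likely to become parents."""
    contenders = rng.sample(range(len(population)), k)
    best = min(contenders, key=lambda i: fitness[i])
    return population[best]

def blend_crossover(a, b, rng, alpha=0.5):
    """Genetic manipulation: BLX-alpha samples each gene around the parents' interval."""
    child = []
    for x, y in zip(a, b):
        lo, hi = min(x, y), max(x, y)
        span = hi - lo
        child.append(rng.uniform(lo - alpha * span, hi + alpha * span))
    return child

def mutate(x, rng, rate=0.1, sigma=0.1):
    """Genetic manipulation: perturb each gene with a small probability."""
    return [v + rng.gauss(0.0, sigma) if rng.random() < rate else v for v in x]

def evolve(objective, dim=5, pop_size=30, generations=100, seed=0):
    rng = random.Random(seed)
    population = [[rng.uniform(-5, 5) for _ in range(dim)] for _ in range(pop_size)]
    for _ in range(generations):
        fitness = [objective(ind) for ind in population]
        elite = population[min(range(pop_size), key=lambda i: fitness[i])]
        offspring = [elite]  # elitism: the current best survives unchanged
        # Reproduction: fill the next generation with children of selected parents.
        while len(offspring) < pop_size:
            p1 = tournament(population, fitness, rng)
            p2 = tournament(population, fitness, rng)
            offspring.append(mutate(blend_crossover(p1, p2, rng), rng))
        population = offspring
    return min(population, key=objective)

best = evolve(sphere)
print(sphere(best))
```

Because the best individual is copied into each new generation, the best objective value never gets worse from one generation to the next.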

- Genetic Algorithm

Proposed by Holland, J. H. in 1975 and extended by Goldberg, D. E., the genetic algorithm (GA) is currently the most widely studied evolutionary computation method. In a GA, the set of candidate solutions to an optimisation problem corresponds to a population, and genetic manipulations such as crossover and mutation generate the next generation from the current one. A mechanism similar to natural selection favours good solutions, i.e. solutions with a high degree of adaptation. Genetic manipulation (crossover, mutation) with the selected solutions as parents produces the next generation of solutions. This repetition corresponds to the succession of generations in evolution, and a GA optimises by following this process. The most basic GAs represent a solution as a binary string, but in our research on evolutionary robotics we use the Real-Coded Genetic Algorithm, which represents a solution as a real-valued vector and allows optimisation in a continuous space.

- Evolutionary Strategy

ES is a method inspired by asexually reproducing organisms. It was developed by Rechenberg, I. and Schwefel, H. P. in the 1960s. From the outset, its main focus has been non-linear optimisation in continuous space, so individuals are represented by real-valued vectors. Like GA, ES incorporates the concept of natural selection, but it emphasises self-adaptation based on the search history of individuals, with mutation as the main search operator. Various extensions have been studied to date; CMA-ES (Covariance Matrix Adaptation Evolution Strategy), which uses a covariance matrix for self-adaptation, has attracted particular attention.
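
As a rough illustration of mutation-driven search with self-adaptation, the sketch below implements a simple (mu + lambda) evolution strategy in which every individual carries its own step size. It is not CMA-ES and not the laboratory's code: the objective `sphere`, the log-normal step-size update, the learning rate `tau` and the population sizes are assumptions.

```python
import math
import random

def sphere(x):
    """Hypothetical objective: minimise the sum of squares."""
    return sum(v * v for v in x)

def evolution_strategy(objective, dim=5, mu=5, lam=20, generations=100, seed=0):
    rng = random.Random(seed)
    tau = 1.0 / math.sqrt(dim)  # step-size learning rate (a common heuristic)
    # Each individual is a pair: (solution vector, step size sigma).
    parents = [([rng.uniform(-5, 5) for _ in range(dim)], 1.0) for _ in range(mu)]
    for _ in range(generations):
        offspring = []
        for _ in range(lam):
            x, sigma = rng.choice(parents)
            # Self-adaptation: mutate the step size first (log-normal update),
            # then use the new step size to mutate the solution.
            new_sigma = sigma * math.exp(tau * rng.gauss(0.0, 1.0))
            new_x = [v + new_sigma * rng.gauss(0.0, 1.0) for v in x]
            offspring.append((new_x, new_sigma))
        # Plus-selection: keep the best mu among parents and offspring.
        pool = parents + offspring
        pool.sort(key=lambda ind: objective(ind[0]))
        parents = pool[:mu]
    return parents[0]

best_x, best_sigma = evolution_strategy(sphere)
print(sphere(best_x), best_sigma)
```

No crossover is used here; step sizes that happen to produce good offspring are inherited along with them, which is the self-adaptation idea in its simplest form.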

Application of Large-Scale Computing Technology

When the controller of each robot in our swarm robot system is composed of an evolutionary artificial neural network, an enormous amount of computation is required to obtain the desired behaviour by artificial evolution. Therefore, we aim to significantly reduce the computation time using grid computing, GPU computing, supercomputing and cloud computing.

- Grid Computing

Grid computing is a generic term for technologies and concepts that realise large-scale parallel and distributed processing by interconnecting multiple heterogeneous computers via a network.

- GPU Computing

The GPU is a graphics processing device that performs parallel processing and contains a large number of simple processors, often more than a thousand. Its advantages include a better price/performance ratio than CPUs and computing performance that continues to improve. For GPU computing, our laboratory uses CUDA, a comprehensive development environment for GPUs.


- Supercomputer

Supercomputers are used for tasks that require advanced computing and often employ CPUs designed for high-performance computing. They achieve high computing performance by combining many computation nodes. In our laboratory, we have focused on distributed shared-memory computing (SMP Cluster), in which multiple shared-memory computing nodes are connected via a network, and have conducted implementation experiments.

- Cloud Computing

Cloud computing is a form of computing in which services provided by servers on a network can be used without the user being aware of the individual servers behind them. It allows computing resources to be used promptly while minimising management effort and the intermediation of service providers. It has attracted attention in recent years because of advantages such as the ability to use resources as and when required and savings on server maintenance costs.

Reinforcement Learning

Reinforcement learning methods based on real-life examples

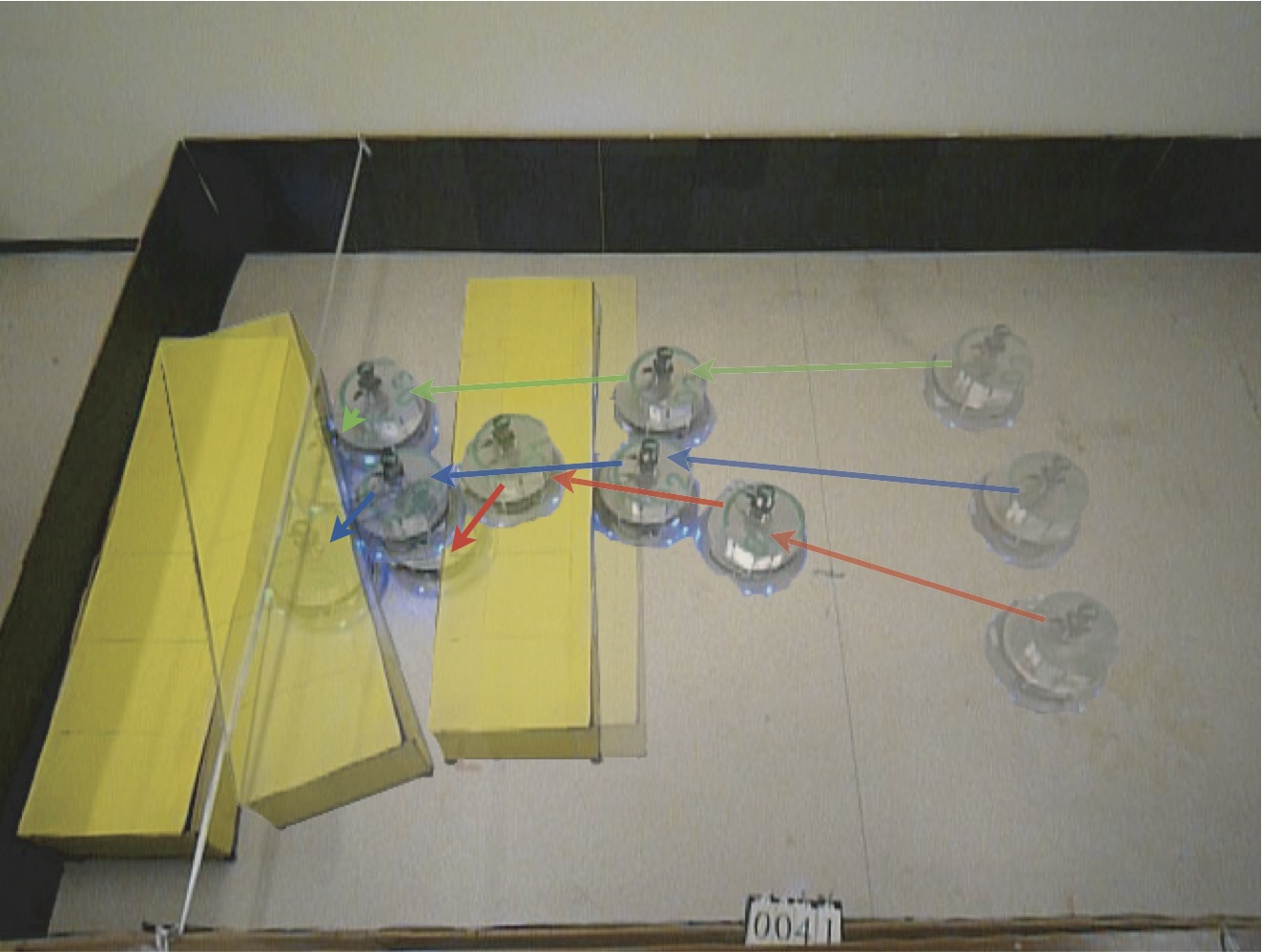

Reinforcement learning is a learning theory inspired by behavioural changes in animals observed in psychological experiments. In reinforcement learning, there is no teacher to indicate the correct output for each input explicitly; instead, learning proceeds from quantified information called reinforcement signals (rewards and punishments). In other words, no a priori knowledge is required: even if the dynamics of the environment are unknown, a robot that is given only the target state can autonomously learn how to reach it through trial and error. Furthermore, reinforcement learning can handle environmental uncertainty and delayed rewards. Because of these features, it is used to acquire the behaviour of intelligent agents such as autonomous robots, and when the environment is Markovian, it is guaranteed to converge to the optimal solution in the limit of infinitely many trials.

Our research group has proposed Bayesian-discrimination-function-based Reinforcement Learning (BRL), a reinforcement learning method that autonomously partitions continuous state/action spaces, with the aim of behaviour acquisition for a group of autonomous robots operating in a real environment. So far, BRL has been used successfully as a controller to acquire cooperative behaviour in multiple arm-type robots and mobile robots. However, subsequent experiments showed that over-learning makes it difficult to identify states the robot has not experienced, and the acquired behaviour may become unstable. To address this problem, we extended BRL with the Support Vector Machine (SVM), a classifier with high discriminative performance, and confirmed that the unstable behaviour improves.
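
To make the trial-and-error idea concrete, the sketch below uses standard tabular Q-learning, not BRL, on a hypothetical one-dimensional corridor. The agent is told only that reaching the rightmost cell yields a reward of 1; the corridor, the reward and all parameter values are assumptions made for illustration.

```python
import random

def q_learning(n_states=6, episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    actions = (-1, +1)  # action 0 moves left, action 1 moves right
    goal = n_states - 1
    q = [[0.0, 0.0] for _ in range(n_states)]  # q[state][action]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap on steps per episode
            # Epsilon-greedy action choice with random tie-breaking.
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                best = max(q[state])
                a = rng.choice([i for i in range(2) if q[state][i] == best])
            nxt = min(max(state + actions[a], 0), goal)
            reward = 1.0 if nxt == goal else 0.0
            # The reward arrives only at the goal, so earlier states learn
            # their value through the discounted value of the next state.
            target = reward if nxt == goal else reward + gamma * max(q[nxt])
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
            if state == goal:
                break
    return q

q = q_learning()
policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(5)]
print(policy)
```

The learned values propagate the delayed reward backwards along the corridor, so the greedy policy heads for the goal without ever being told the environment's dynamics.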

Human-Machine Cooperative System

In recent years, robots have entered fields that are in close contact with human society, such as nursing care and medical care, due to the remarkable development of technology. In this context, research on human-machine cooperation systems, in which humans and machines work together, has attracted much attention.

Important issues in dealing with these human-machine cooperative systems include how to ensure the safety of the humans involved and how roles should be shared between humans and robots. In addition to safety, research on the appropriate design of role-sharing is required, because depending on the design method, it may lead users to mistrust the system or to place too much confidence in it.

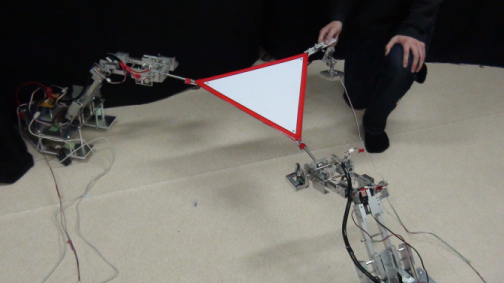

Our research group has proposed the application of a multi-robot system (MRS) to address these problems. Compared to the approach of increasing the performance of a single robot, the MRS is expected to solve tasks even if each robot is inefficient, thus minimising the risk to humans. The concept of autonomous functional differentiation, a type of adaptive function allocation, has been introduced into MRS to develop a system that dynamically allocates roles according to the situation, with the aim of reducing the automation surprises that users may face.

Biomimetics

Social organisms are known to cooperate with one another to achieve tasks that would be impossible individually, demonstrating robust and flexible behaviors at the group level. One example of this behavior is the flocking of birds or schooling of fish. Flocking behavior involves numerous individuals moving together in a coordinated direction, commonly observed in nature. According to behavioral ecology, each individual within a flock acts selfishly, without considering the group or species. Despite this theory, it is believed that animals engage in flocking for various advantages unattainable alone, such as increased survival rates, more accurate migration, and reduced energy costs. Thus, organisms have acquired excellent behaviors through the process of evolution. Given this background, mimicking and applying biological flocking behavior is highly meaningful from an engineering perspective.

This research proposes a method for generating flocking behavior based on insights from biology. Specifically, it constructs flocking behavior rules based on duck flocking models and decision-making models within biological populations. The behavior of robot swarms generated according to these rules will be quantitatively evaluated to verify the characteristics of flocking behavior.
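
The specific rules derived from the duck flocking and decision-making models are not given here. As a generic stand-in, the sketch below shows one update step of the well-known separation, alignment and cohesion rules (boids-style flocking); the function name `flocking_step`, the weights and the neighbourhood radius are all assumptions.

```python
import math

def flocking_step(positions, velocities, radius=2.0,
                  w_sep=0.05, w_align=0.05, w_coh=0.01, dt=1.0):
    """Advance a 2-D flock by one step using only local neighbour information."""
    new_velocities = []
    for i, (p, v) in enumerate(zip(positions, velocities)):
        neighbours = [j for j, q in enumerate(positions)
                      if j != i and math.dist(p, q) <= radius]
        vx, vy = v
        if neighbours:
            n = len(neighbours)
            # Cohesion: steer toward the neighbours' centre of mass.
            cx = sum(positions[j][0] for j in neighbours) / n
            cy = sum(positions[j][1] for j in neighbours) / n
            vx += w_coh * (cx - p[0])
            vy += w_coh * (cy - p[1])
            # Alignment: steer toward the neighbours' mean velocity.
            ax = sum(velocities[j][0] for j in neighbours) / n
            ay = sum(velocities[j][1] for j in neighbours) / n
            vx += w_align * (ax - v[0])
            vy += w_align * (ay - v[1])
            # Separation: steer away from each neighbour.
            for j in neighbours:
                vx += w_sep * (p[0] - positions[j][0])
                vy += w_sep * (p[1] - positions[j][1])
        new_velocities.append((vx, vy))
    new_positions = [(p[0] + dt * v[0], p[1] + dt * v[1])
                     for p, v in zip(positions, new_velocities)]
    return new_positions, new_velocities

positions = [(0.0, 0.0), (1.0, 0.0), (10.0, 10.0)]
velocities = [(1.0, 0.0), (0.0, 1.0), (0.5, 0.5)]
positions, velocities = flocking_step(positions, velocities)
print(velocities)
```

Each robot uses only the neighbours within `radius`, so a robot with no neighbours simply keeps its velocity.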

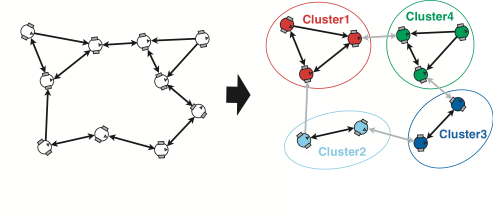

Clustering

Although there have been studies on generating robot swarm behaviour in swarm robotics (SR), to the best of our knowledge there have been no previous studies on analysing it. This is probably because the complexity and hyper-redundancy of SR systems make swarm behaviour very difficult to analyse. However, such analysis is essential to confirm the characteristics and effectiveness of SR, and our research group has proposed a method for it. In general, many social insects allocate tasks adaptively across the whole group according to the situation, either to accomplish tasks efficiently or to accomplish tasks beyond the ability of any individual. Based on this, we focus on task allocation as an approach to analysing swarm behaviour in SR. We assume that the cooperative behaviour of SR is deeply related to the local information links between robots. Therefore, we treat a group of robots as a network defined by these information links, and construct a group behaviour analysis method for SR systems based on complex network theory. Furthermore, we believe that the analysis methods used in animal behaviour studies, which deal with actual social organisms, are also effective for robot swarms, and we are attempting to apply them.
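
The idea of treating a robot group as a network can be sketched as follows. This is an illustration under stated assumptions, not the group's analysis method: two robots are assumed to share an information link when they are within a hypothetical communication range `comm_range`, and the clustering coefficient is used as one example of a complex-network measure.

```python
import math
from itertools import combinations

def build_network(positions, comm_range):
    """Link two robots when they are within communication range of each other."""
    adj = {i: set() for i in range(len(positions))}
    for i, j in combinations(range(len(positions)), 2):
        if math.dist(positions[i], positions[j]) <= comm_range:
            adj[i].add(j)
            adj[j].add(i)
    return adj

def clustering_coefficient(adj, node):
    """Fraction of a node's neighbour pairs that are themselves linked."""
    neighbours = adj[node]
    k = len(neighbours)
    if k < 2:
        return 0.0
    links = sum(1 for a, b in combinations(neighbours, 2) if b in adj[a])
    return 2.0 * links / (k * (k - 1))

# Three robots close together and one far away (positions are made up).
positions = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (5.0, 5.0)]
adj = build_network(positions, comm_range=1.5)
degrees = [len(adj[i]) for i in range(len(positions))]
print(degrees)                          # [2, 2, 2, 0]
print(clustering_coefficient(adj, 0))   # 1.0
```

Recomputing such measures at each time step would show how sub-groups form and dissolve as the robots move.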